How Many Earthquake Records to Use for Design

Abstract

The analysis of structures is a fundamental part of seismic design and assessment. It began more than 100 years ago, when static analysis with lateral loads of about 10% of the weight of the structure was adopted in seismic regulations. For a long time, seismic loads of this size remained in the majority of seismic codes worldwide. Over time, more advanced analysis procedures were implemented, taking into account the dynamics and nonlinear response of structures. In the future, methods with explicit probabilistic considerations may be adopted as an option. In this paper, the development of seismic provisions as related to analysis is summarized, the present state is discussed, and possible further developments are envisaged.

Introduction: as simple as possible but not simpler

Seismic analysis is a tool for the estimation of structural response in the process of designing earthquake resistant structures and/or retrofitting vulnerable existing structures. In principle, the problem is difficult because the structural response to strong earthquakes is dynamic, nonlinear and random. All three characteristics are unusual in structural engineering, where the great majority of problems are (or at least can be adequately approximated as) static, linear and deterministic. Consequently, seismic design requires special skills and data that the average designer does not necessarily have.

Methods for seismic analysis, intended for practical applications, are provided in seismic codes. (Note that in this paper the term "code" is used broadly to include codes, standards, guidelines, and specifications.) Whereas the most advanced analytical, numerical and experimental methods should be used in research aimed at the development of new knowledge, the methods used in codes should, as Albert Einstein said, be "as simple as possible, but not simpler". A balance between required accuracy and complexity of analysis should be found, depending on the importance of a structure and on the aim of the analysis. It should not be forgotten that the details of the ground motion during future earthquakes are unpredictable, whereas the details of the dynamic structural response, especially in the inelastic range, are highly uncertain. According to Aristotle, "it is the mark of an educated mind to rest satisfied with the degree of precision which the nature of the subject admits and not to seek exactness where only an approximation is possible" (Nicomachean Ethics, Book One, Chapter 3).

After computers became widely available, i.e. in the late 1960s and the 1970s, a rapid development of procedures for seismic analysis and supporting software was witnessed. Nowadays, due to tremendous developments in computing power, numerical methods, and software, there are almost no limits related to computation. Unfortunately, knowledge about ground motion and structural behaviour, especially in the inelastic range, has not kept pace. Also, we cannot expect that, in general, the basic capabilities of engineers will be better than in the past. So there is a danger, as M. Sozen wrote as early as 2002: "Today, ready access to versatile and powerful software enables the engineer to do more and think less." (M. Sozen, A Way of Thinking, EERI Newsletter, April 2002.) Two other giants of earthquake engineering also made observations which remain valid today. R. Clough, one of the fathers of the finite element method, stated: "Depending on the validity of the assumptions made in reducing the physical problem to a numerical algorithm, the computer output may provide a detailed picture of the true physical behavior or it may not even remotely resemble it" (Clough 1980, p. 369). Bertero (2009, p. 80) warned: "There are some negative aspects to the reliance on computers that we should be concerned about. It is unfortunate that there has been a trend among the young practicing engineers who are conducting structural analysis, design, and detailing using computers to think that the computer automatically provides reliability". Today, it is the lack of reliable data and the limited capabilities of designers, rather than computational tools as was the case in the past, that represent the weak link in the chain of the design process.

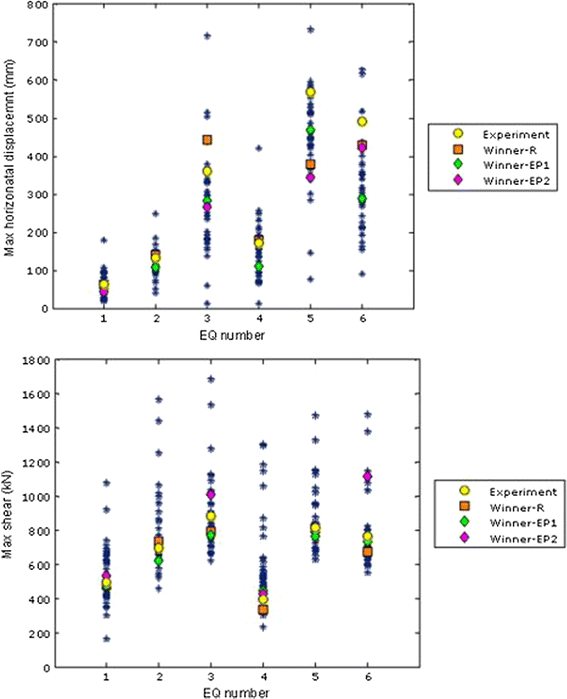

The results of a blind prediction contest indicate how limited the ability of the profession (on average) is to adequately predict seismic structural response. The contest involved a simple full-scale reinforced concrete bridge column with a concentrated mass at the top, subjected to six consecutive unidirectional ground motions. The following description of the contest and its results is summarized from Terzic et al. (2015). The column was not straightened or repaired between the tests. During the first ground motion, the column remained within its elastic range. The second test initiated a nonlinear response of the column, whereas significant nonlinearity was observed during the third test. For each earthquake, each contestant/team had to predict the peak values of global (displacement, acceleration, and residual displacement), intermediate (bending moment, shear, and axial force), and local (axial strain and curvature) response quantities. Predictions were submitted by 41 teams from 14 different countries. The contestants held either MSc or PhD degrees. They were supplied with data about the ground motions and structural details, including the complete dimensions of the test specimen and the one-dimensional mechanical properties of the steel and concrete. In this way, the largest sources of uncertainty, i.e. the characteristics of the ground motion and the material characteristics, were eliminated. The only remaining uncertainty was related to the modelling and analysis of the structural response. Nevertheless, the results showed a very wide scatter in the blind predictions of the basic engineering response parameters. For example, the average coefficient of variation in predicting the maximum displacement and acceleration over the six ground motions was 39 and 48%, respectively.
Biases in the median predicted responses were significant, ranging, for the different tests, from 5 to 35% for displacement and from 25 to 118% for acceleration. More detailed results for the maximum displacements at the top of the column and the maximum shear forces at the base of the column are presented in Fig. 1. A large dispersion of the results can be observed even for the elastic (first ground motion) and nearly elastic (second ground motion) structural behaviour.
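The two scatter measures quoted above can be computed as sketched below. This is a minimal illustration, assuming that the coefficient of variation is the sample standard deviation of the predictions divided by their mean, and that the bias is the relative difference between the median prediction and the measured value; Terzic et al. (2015) may define both quantities differently. The function name `prediction_stats` and all numbers are hypothetical and are not contest data.

```python
import statistics

def prediction_stats(predictions, measured):
    """Return (coefficient of variation, relative bias of the median).

    Assumed definitions: CoV = sample standard deviation / mean of the
    predictions; bias = (median prediction - measured) / measured.
    """
    mean = statistics.mean(predictions)
    cov = statistics.stdev(predictions) / mean
    bias = (statistics.median(predictions) - measured) / measured
    return cov, bias

# Hypothetical peak-displacement predictions (mm) from five teams for one test,
# and a hypothetical measured value (not taken from the contest).
preds = [80.0, 95.0, 100.0, 120.0, 150.0]
cov, bias = prediction_stats(preds, measured=90.0)
print(f"CoV = {cov:.0%}, median bias = {bias:+.0%}")
```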

Fig. 1 Predictions of maximum horizontal displacements at the top of the column and maximum base shears versus measured values (Terzic et al. 2015)


The results of the blind prediction contest clearly demonstrate that the most advanced and sophisticated models and methods do not necessarily lead to adequate results. For example, it was observed that a comparable level of accuracy could be achieved whether the column was modelled with complex force-based fibre beam-column elements or with simpler beam-column elements with concentrated plastic hinges. Predictions of structural response depended greatly on the analyst's experience and modelling skills. Some of the results were completely invalid and could have led to gross errors if used in design. A simple check, e.g. with the response spectrum approach applied to a single-degree-of-freedom system, would have shown that these results were nonsensical.
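The kind of simple check mentioned above can be sketched as follows. It assumes elastic behaviour (so it applies at most to the first, elastic test), a single-degree-of-freedom idealization with mass m and lateral stiffness k, and a given elastic spectrum. The peak displacement is estimated as Sd = Sa/ω² with ω = √(k/m), and the base shear as m·Sa. The function names, the spectrum shape, the tolerance factor of 2 and all numerical values are hypothetical and are not taken from the contest.

```python
import math

def sdof_estimate(mass_kg, stiffness_n_per_m, sa_of_period):
    """Elastic single-degree-of-freedom estimate of peak response.

    sa_of_period is a callable returning the pseudo-spectral acceleration
    (m/s^2) at a given period (s), for the damping assumed for the structure.
    """
    omega = math.sqrt(stiffness_n_per_m / mass_kg)  # circular frequency, rad/s
    period = 2.0 * math.pi / omega                   # natural period, s
    sa = sa_of_period(period)                        # spectral acceleration, m/s^2
    sd = sa / omega ** 2                             # spectral (peak) displacement, m
    base_shear = mass_kg * sa                        # peak base shear, N (equals k * sd)
    return period, sd, base_shear

def is_plausible(predicted, estimate, factor=2.0):
    """True if a prediction lies within a (hypothetical) factor of the simple estimate."""
    return estimate / factor <= predicted <= estimate * factor

def hypothetical_spectrum(period, ag=0.3 * 9.81, tc=0.5):
    """Hypothetical idealized spectrum: plateau of 2.5 * ag up to tc, then 1/T decay."""
    return 2.5 * ag * min(1.0, tc / period)

# Hypothetical column properties (not the contest specimen).
T, sd, v = sdof_estimate(230e3, 10e6, hypothetical_spectrum)
print(f"T = {T:.2f} s, Sd = {sd * 1000:.0f} mm, V = {v / 1000:.0f} kN")
print(is_plausible(predicted=0.30, estimate=sd))  # checks a 300 mm prediction
```

Such an estimate is not meant to replace a nonlinear model; it only brackets the order of magnitude against which a detailed prediction can be screened.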

This paper deals with analysis procedures used in seismic provisions. The development of seismic provisions related to the analysis of building structures is summarized, the present state is discussed, and possible further developments are envisaged. Although the situation worldwide is discussed in general, in some cases emphasis is placed on the situation in Europe and on the European standard for the design of structures for earthquake resistance, Eurocode 8 (CEN 2004), denoted in this paper as EC8. The discussion represents the views of the author and is based on his experience in teaching, research, consulting, and code development work.

History: domination of the equivalent static procedure and introduction of dynamics

Introduction

Earthquake engineering is a relatively young discipline: "it is a twentieth century development" (Housner 1984). Although some types of old buildings have, for centuries, proved remarkably resistant to earthquake forces, their seismic resistance has been achieved by good conceptual design without any seismic analysis. Early provisions related to the earthquake resistance of buildings, e.g. in Lima, Peru (Krause 2014) and Lisbon, Portugal (Cardoso et al. 2004), which were adopted after the disastrous earthquakes of 1699 and 1755, respectively, were restricted to construction rules and height limitations. It appears that the first engineering recommendations for seismic analysis were made in 1909 in Italy. Housner apparently regarded this date as the beginning of earthquake engineering.

The period up to 1978 was dominated by the equivalent static procedure. "The equivalent static method gradually spread to seismic countries around the world. First it was used by progressive engineers and later was adopted by building codes. Until the 1940s it was the standard method of design required by building codes" (Housner 1984), and it is still widely used today for simple regular structures, with updated values for the seismic coefficients. "This basic method has stood the test of time as an adequate way to proportion the earthquake resistance of most buildings. Better methods would evolve, but the development of an adequate seismic force analysis method stands out in history as the first major saltation or jump in the state of the art." (Reitherman 2012, p. 174). Of the three basic features of seismic structural response, dynamics was the first to be introduced. Later, inelastic behaviour was approximately taken into account by the gradation of seismic loads for different structural systems, whereas randomness was considered implicitly by using various safety factors.

In the following sections we will summarize the development of seismic analysis procedures in different codes (see also Table 1). It will be shown that, initially, the equivalent static approach was used. With some exceptions, the seismic coefficient remained at about 0.1 for several decades.


Dynamic considerations were introduced by relating the seismic coefficient to the period of the building, indirectly via the number of storeys in 1943, and directly in 1956. The modal response spectrum method appeared for the first time in 1957. The impact of the energy dissipation capacity of structures in the inelastic range (although this was not explicitly stated in the code) was taken into account in 1959. Modern codes can be considered to have begun with the ATC 3-06 document "Tentative provisions for the development of seismic regulations for buildings", which was released in 1978 (ATC 1978). This document formed the basis upon which most of the subsequent guidelines and regulations were developed both in the United States and elsewhere in the world.

When discussing seismic code developments, the capacity design approach, developed in New Zealand in the early 1970s, should not be ignored. It is perhaps one of the most ingenious solutions in earthquake engineering. Structures designed by means of the capacity design approach are expected to possess adequate ductility at both the local and global level. For such structures, it is entirely legitimate to apply linear analysis with a force reduction factor that takes into account the energy dissipation capacity. Of course, such an analysis cannot quantify the inelastic behaviour. Since capacity design is not a direct part of the analysis process, it will not be discussed further in this paper.

Italy

After the earthquake in Messina in 1908, a committee of Italian experts (nine practicing engineers and five university professors) was formed. The committee prepared a quantitative recommendation for seismic analysis, which was published in 1909. Mainly on the basis of a study of three timber-framed buildings which had survived the earthquake with little or no damage, the committee proposed that, "in future, structures must be so designed that they would resist, in the first story, a horizontal force equivalent to 1/12th of the weight above, and in the second and third story, 1/8th of the weight above" (Freeman 1932, p. 577). The procedure became mandatory through a Royal Decree in the same year (Sorrentino 2007). At that time, three-story buildings were the tallest permitted. In the committee report it was stated "that it was reasonable to add 50% to the seismic force to be provided against in upper stories, because of the observed greater amplitude of oscillation in tall as compared with low buildings and also in adherence to the universally admitted principle that the center of gravity of buildings should be as low as possible, and hence the upper stories should be lighter than the lower" (Freeman 1932, p. 577). According to Reitherman (2012, p. 193), after the disastrous 1915 Avezzano earthquake, to the north of the Strait of Messina, this regulation was adjusted to provide factors of 1/8 and 1/6, respectively.

The committee's proposal for the determination of lateral forces actually introduced the seismic coefficient, which is used to multiply the weight of the building in order to obtain the total seismic force (the base shear force). The seismic coefficient enables a seismic analysis to be performed by means of the equivalent static method. It has a theoretical basis. According to D'Alembert's principle, a fictitious inertia force, proportional to the acceleration and mass of a particle and acting in the direction opposite to the acceleration, can be used in a static analysis in order to simulate a dynamic problem. The seismic coefficient represents the ratio between the acceleration of the mass and the acceleration of gravity. The acceleration of the mass depends on the acceleration of the ground and on the dynamic characteristics of the structure. At the beginning of the twentieth century, no data about ground accelerations were available, and the use of structural dynamics was therefore infeasible. M. Panetti, who appears to have been the main author of the seismic coefficient recommendation in the report after the Messina earthquake, recognized "that the problem was really one of dynamics or kinetics, and that to solve this would involve such complication that one must have recourse to the assumption that a problem of statics could be so framed as to provide for safety" (Freeman 1932, p. 577). So, according to Freeman, the proposed seismic coefficient, as well as the seismic coefficients used during the following decades in California and Japan, were "largely a guess tempered by judgement". Nevertheless, it is interesting to note that the order of magnitude of the seismic coefficient proposed in 1909 remained in seismic codes in different countries for many decades (see Sect. 2.6).
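In code form, the equivalent static method reduces to a single multiplication. The sketch below, with hypothetical names and values, shows that the static force C·W, with C = a/g, equals the D'Alembert inertia force m·a. This is why the coefficient can stand in for the dynamic problem once the acceleration of the mass is known or, as in 1909, assumed.

```python
G = 9.81  # acceleration of gravity, m/s^2

def seismic_coefficient(mass_acceleration):
    """C = a / g: ratio of the mass acceleration (m/s^2) to gravity."""
    return mass_acceleration / G

def base_shear(weight, coefficient):
    """Equivalent static base shear V = C * W, in the units of W."""
    return coefficient * weight

def inertia_force(mass, acceleration):
    """D'Alembert inertia force m * a (magnitude; it acts opposite to a)."""
    return mass * acceleration

mass = 500e3                  # kg, hypothetical building mass
a = 0.1 * G                   # assumed acceleration of the mass, m/s^2
c = seismic_coefficient(a)    # approximately 0.1
w = mass * G                  # weight, N
# The static force C * W equals the inertia force m * a.
print(c, base_shear(w, c), inertia_force(mass, a))
```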

The most advanced of the engineers who studied the effects of the 1908 Messina earthquake was A. Danusso. He "was probably the first to propose a dynamic analysis method rather than static lateral force analysis method and, possibly for the first time in earthquake engineering, he stated that seismic demand does not depend upon the ground motion characteristics alone" (Sorrentino 2007). Danusso not only stated this relationship verbally, but also presented his thinking mathematically. Danusso's approach was considered too far ahead of its time for practical implementation by the committee formed after the Messina earthquake, which found the static approach more practical for widespread application (Reitherman 2012, p. 193). As a matter of historical fact, dynamic analysis was not implemented in seismic provisions for the next half century.

The committee's proposal to conventionally substitute purely static actions for dynamic ones in representing seismic effects had a great impact on the subsequent development of early earthquake engineering in Italy, since it simplified the design procedures but excluded any dynamic considerations from the code until the mid-1970s (1974), when a design spectrum was introduced (Sorrentino 2007) and an up-to-date seismic code was adopted.

The Italians were the first to propose, in 1909, the equivalent static procedure for seismic analysis, and to implement it in a code. The procedure has been a constituent part of practically all seismic codes up to the present. The first dynamic seismic computations also appear to stem from Italy. However, these achievements did not have a widespread effect worldwide. It was the Japanese achievements, described in the next section, that became more widely known.

Japan

In Japan, in 1916 R. Sano proposed the use of seismic coefficients in earthquake resistant building design. "He assumed a building to be rigid and directly connected to the ground surface, and suggested a seismic coefficient equal to the maximum ground acceleration normalized to gravity acceleration. Although he noted that the lateral acceleration response might be amplified from the ground acceleration with lateral deformation of the structure, he ignored the effect in determining the seismic coefficient. He estimated the maximum ground acceleration in the Honjo and Fukagawa areas on alluvial soil in Tokyo to be 0.30 g and above on the basis of the damage to houses in the 1855 Ansei-Edo (Tokyo) earthquake, and that in the Yamanote area on diluvial hard soil to be 0.15 g" (Otani 2004). T. Naito, one of Sano's students at the University of Tokyo, became, like Sano, not just a prominent earthquake engineer but also one of the nation's most prominent structural engineers. He followed Sano's seismic coefficient design approach, usually using a coefficient of 1/15 (7%) to design buildings before the 1923 Kanto Earthquake. The coefficient was uniformly applied to masses up the height of the building (Reitherman 2012, p. 168).

The first Japanese building code was adopted in 1919. The 1923 Great Kanto earthquake led to the addition of an article to this code (adopted in 1924) requiring the seismic design of all buildings for a seismic coefficient of 0.1. "From the incomplete measurement of ground displacement at the University of Tokyo, the maximum ground acceleration was estimated to be 0.3 g. The allowable stress in design was one third to one half of the material strength in the design regulations. Therefore, the design seismic coefficient 0.1 was determined by dividing the estimated maximum ground acceleration of 0.3 g by the safety factor of 3 of allowable stress" (Otani 2004).

The Japanese code, which applied to certain urban districts, was a landmark in the development of seismic codes worldwide. In the Building Standard Law, adopted in 1950 and applicable to all buildings throughout Japan, the seismic coefficient was increased from 0.1 to 0.2 for buildings with heights of 16 m and less, increasing by 0.01 for every 4 m above (Otani 2004). Allowable stresses under temporary loads were set at twice the allowable stresses under permanent loads. From then on, the seismic coefficients in Japan remained larger than in the rest of the world. Later significant changes included the abolition of the 100 ft height limit in 1963. In 1981 the Building Standard Law was revised. Its main new features included a seismic coefficient that varied with period, and two-level design. The first phase design was similar to the design method used in earlier codes. It was intended as a strength check for frequent moderate events. The second phase design was new. It was intended as a check for strength and ductility for a maximum expected event (Whittaker et al. 1998). It is interesting to note that, in Japan, the seismic coefficient varied with the structural vibration period only after 1981.
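The two Japanese coefficients described above can be written out as follows. This is a sketch under stated assumptions: the 1950 rule is applied to the building height exactly as summarized in the text, and the treatment of partial 4 m steps (only completed steps count) is an assumption, since it is not specified here. The function and constant names are hypothetical.

```python
import math

# 1924 value (Otani 2004): estimated maximum ground acceleration of 0.3 g
# divided by the safety factor of 3 of allowable stress design.
C_1924 = 0.3 / 3

def c_1950(height_m, base=0.2, h_ref=16.0, step_m=4.0, increment=0.01):
    """Seismic coefficient under the 1950 rule as summarized in the text.

    Assumption: only completed 4 m steps above 16 m add 0.01 each.
    """
    if height_m <= h_ref:
        return base
    steps = math.floor((height_m - h_ref) / step_m)
    return base + increment * steps

for h in (10.0, 16.0, 24.0, 31.0):
    print(f"h = {h:4.1f} m -> C = {c_1950(h):.2f}")
```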

United States

Surprisingly, the strong earthquake that hit San Francisco in 1906 did not trigger the development of seismic provisions in the United States. Instead, design for wind forces was intended to protect buildings against both wind and earthquake damage. Design recommendations applied only to buildings taller than 100 feet (30.5 m), or taller than three times the building's least dimension, and consisted of applying a 30 psf (1.44 kPa) wind load to the building's elevation. Later, the recommended wind load was reduced to 20 psf, and then to 15 psf (Diebold et al. 2008). At that time, however, no building code provisions existed for the design of shorter structures to resist wind or earthquake loads.

The first regulations on earthquake-resistant structures appeared in the United States only in 1927, after the earthquake in Santa Barbara in 1925. The provisions were contained in an appendix to the Uniform Building Code, and were not mandatory. The equivalent static procedure was used. The seismic coefficient ranged from 7.5 to 10% of the total dead load plus the live load of the building, depending on soil conditions. This was the first time that a building code had recognized the likelihood of the amplification of ground motion by soft soil (Otani 2004). After the 1925 Santa Barbara earthquake, some California municipalities did adopt mandatory seismic provisions in their building codes.

The first mandatory seismic codes used in the United States were published in 1933, after the Long Beach earthquake of that year, which caused extensive damage to school buildings. Two California State Laws were enacted. The Field Act required the earthquake-resistant design and construction of all new public schools in California. The adopted regulations required that masonry buildings without frames be designed to resist a lateral force equal to 10% of the sum of the dead load and a fraction of the design live load. For other buildings the seismic coefficient was set at 2–5%. Shortly after this, the Riley Act was adopted with a mandatory seismic design coefficient of 2% of the sum of the dead load and live load for most buildings. At about the same time, in Los Angeles a lateral force requirement of 8% of the total weight and half of the design live load was imposed. This requirement was also adopted by the 1935 Uniform Building Code for the zone of highest seismicity (Berg 1983, pp. 25–26), whereas, recognizing the different levels of seismic risk in different regions, the seismic coefficients in the other two zones were lower.

Although the first regulations were based on the static concept, and did not take into account the fact that the structural acceleration (and thus the seismic load) depends on the vibration period of the building, the developers of the early codes were aware of the dynamic nature of the structural response and of the importance of the periods of vibration of buildings. Freeman wrote: "Those who have given the matter careful study recognize that as a means of lessening the amplitude of oscillation due to synchronism [i.e. resonance], it is extremely important to make the oscillation period of the building as small as practicable, or smaller than the oscillation period of the ground ordinarily found in great earthquakes." (Freeman 1932, p. 799). However, since the details of the ground motion, including the frequency content, were not known, any realistic quantitative considerations were impossible. The first generation of analysis methods evolved before response spectrum analysis and data from strong-motion instruments could be taken into account, although the basic concept was known. Some of the most educated guesses about the frequency content of ground shaking were completely wrong in the light of today's knowledge. For example, Freeman (1930, p. 37) stated that "In Japan it has been noted that the destructive oscillations of the ground in an earthquake are chiefly those having a period from 1 to 1.5 s; therefore, some of the foremost Japanese engineers take great care in their designs to reduce the oscillation period of a building as nearly as practicable to from 0.5 to 0.6 s, or to less than the period of the most destructive quake, and also strive to increase the rigidity of the building as a whole in every practical way." Today, we know that, in general, the spectral acceleration in the 0.5 to 0.6 s range is larger than in the range from 1 to 1.5 s.

The first code that related the seismic coefficient to the flexibility of the building was enacted by the City of Los Angeles in 1943. The seismic coefficient decreased with the number of stories, reflecting the decrease of structural acceleration with increasing flexibility and natural period of the building. Thus, the building period was considered indirectly. This code also took into account the fact that the lateral force varies over the height of the structure. The building period became an explicit factor in the determination of seismic design forces only in 1956, when the City of San Francisco enacted a building code based on recommendations published in 1951 by a joint committee of the Structural Engineers Association of Northern California and the San Francisco Section of the American Society of Civil Engineers (Berg 1983, pp. 26–27). The recommendations included an inverted triangle distribution of design lateral loads along the height of the building, which is still a basic feature of equivalent static lateral force procedures.
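An inverted triangle distribution is commonly written as F_i = V·W_i·h_i / Σ W_j·h_j, where V is the base shear, W_i the weight of floor i and h_i its height above the base; with equal floor weights and equal storey heights, the floor forces grow linearly with height. The sketch below uses that common form as an assumption; the exact expression in the 1951 recommendations may differ in detail, and the function name is hypothetical.

```python
def inverted_triangle_forces(base_shear, weights, heights):
    """Distribute V as F_i = V * W_i * h_i / sum_j(W_j * h_j).

    weights: floor weights; heights: floor heights above the base.
    """
    wh = [w * h for w, h in zip(weights, heights)]
    total = sum(wh)
    return [base_shear * x / total for x in wh]

# Hypothetical three-storey building with equal floor weights and 3 m storeys.
forces = inverted_triangle_forces(600.0, [1.0, 1.0, 1.0], [3.0, 6.0, 9.0])
print(forces)  # [100.0, 200.0, 300.0]
```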

A theoretical basis for considering the dependence of seismic load on the natural period of the building was made possible by the development of response spectra. Although the initial idea of representing earthquake ground motion with spectra had already appeared in the early 1930s, the practical use of spectra was not possible for over 30 years, due to the lack of data about ground motion during earthquakes and because of the very extensive computational work required to determine spectra, which was virtually impossible without computers. For details on the historical development of response spectra, see, e.g. Chopra (2007) and Trifunac (2008).

The first code which took into account the impact of the energy dissipation capacity of structures in the inelastic range (although this was not explicitly stated in the code) was the SEAOC model code (the first "Blue Book"), in 1959. In order to distinguish between the inherent ductility and energy dissipation capacities of different structures, a coefficient K was introduced in the base shear equation. Its values were specified for four types of building construction. The basic seismic coefficient of 0.10 increased the most (by a factor of 1.33) in the case of wall structures, and decreased the most (by a factor of 0.67) in the case of space frames. According to Blume et al. (1961): "The introduction of K was a major step forward in code writing to provide in some degree for the real substance of the problem—energy absorption—and for the first time to recognize that equivalent acceleration or base shear coefficient C alone is not necessarily a direct index of earthquake resistance and public safety."
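The effect of K on the base shear can be illustrated with the two factors quoted above. This is a simplified sketch: it keeps the seismic coefficient at the basic value of 0.10 for every building, which is an assumption made here for illustration, and the names `K_FACTORS` and `base_shear_with_k` are hypothetical.

```python
# K values quoted in the text for the two extreme cases; the factors for the
# other two construction types are not given there and are omitted here.
K_FACTORS = {"wall structure": 1.33, "space frame": 0.67}

def base_shear_with_k(weight, system, basic_coefficient=0.10):
    """V = K * C * W, with C held at the basic value of 0.10 (an assumption)."""
    return K_FACTORS[system] * basic_coefficient * weight

for system in K_FACTORS:
    print(system, base_shear_with_k(1000.0, system))  # W = 1000 in any force unit
```

With these factors, a wall structure is designed for about twice the base shear of a space frame of the same weight, which is the gradation by structural system that the K coefficient introduced.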

Seismic regulations at that time were mainly limited to analysis, and did not contain provisions on dimensioning and detailing. It was not until the end of the 1960s that, in the United States, provisions related to the detailing of ductile reinforced concrete frames were adopted.

An early appearance of Performance-Based Seismic Design can be found in the 1967 edition of the SEAOC Blue Book, where the following criteria were defined:

1. Resist minor earthquakes without damage.
2. Resist moderate earthquakes without structural damage, but with some nonstructural damage.
3. Resist major earthquakes, of the intensity or severity of the strongest experienced in California, without collapse, but with some structural as well as nonstructural damage.

The first document that incorporated most of the modern principles of seismic analysis was ATC 3-06 (ATC 1978), which was released in 1978 (Fig. 2) as a result of years of work by many experts in the United States. As indicated by its title, the document represented tentative provisions for the development of seismic regulations for buildings. Its primary purpose was to present the current state of knowledge in the fields of engineering seismology and earthquake engineering in the form of a code. It contained a series of new concepts that departed significantly from existing regulations, so the authors explicitly discouraged its use as a code until its usefulness, practicality, and impact on costs had been checked. Time has shown that the new concepts have been generally accepted, and that the document formed the basis for subsequent guidelines and regulations in the United States and elsewhere in the world, with the partial exception of Japan.

The document followed the design philosophy for seismic regions set forth in the 1967 SEAOC Blue Book, according to which the primary purpose of seismic regulations is the protection of human life, achieved by preventing the collapse of buildings or their parts, whereas in strong earthquakes damage, and thus material losses, are permitted. At the very beginning it was stated: "The design earthquake motions specified in these provisions are selected so that there is low probability of their being exceeded during the normal lifetime expectancy of the building. Buildings and their components and elements … may suffer damage, but should have a low probability of collapse due to seismic-induced ground shaking."

The document contained several significant differences compared to earlier seismic provisions. Seismic hazard maps, which represent the basis for design seismic loads, were based on a 90% probability that the seismic hazard, represented by the effective peak ground acceleration and the effective peak ground velocity (which serve as factors for constructing smoothed elastic response spectra), would not be exceeded in 50 years. This probability corresponds to a return period of 475 years. Buildings were classified into seismic hazard exposure groups. Seismic performance categories, which determined the design and analysis requirements, depended on the seismicity index and the building seismic hazard exposure group. The analysis and design procedures were based upon a limit state characterized by significant yield, rather than upon allowable stresses as in earlier codes. This was an important change, which also influenced the level of the seismic coefficient.
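The link between a 10% probability of exceedance in 50 years and the 475-year return period follows from the usual Poisson assumption for earthquake occurrence, p = 1 − exp(−t/T_R), i.e. T_R = −t / ln(1 − p). A short sketch, with hypothetical function names:

```python
import math

def return_period(p_exceed, exposure_years):
    """Return period (years) for an exceedance probability over an exposure time.

    Assumes Poisson (memoryless) occurrence: p = 1 - exp(-t / T_R).
    """
    return -exposure_years / math.log(1.0 - p_exceed)

def exceedance_probability(return_period_years, exposure_years):
    """Inverse relation: probability of at least one exceedance in t years."""
    return 1.0 - math.exp(-exposure_years / return_period_years)

tr = return_period(0.10, 50.0)
print(f"{tr:.1f} years")  # 474.6 years, conventionally rounded to 475
```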

An empirical force reduction factor R, called the "Response modification factor", was also introduced in ATC 3-06. Experience has shown that the great majority of well designed and constructed buildings survive strong ground motions, even if they were in fact designed for only a fraction of the forces that would develop if the structure remained entirely linearly elastic. A reduction of seismic forces is possible thanks to the beneficial effects of energy dissipation in ductile structures and inherent overstrength. Although the influence of the structural system and its capacity for energy dissipation had already been recognized in the late 1950s, the force reduction factor (or simply R factor) in its current format was first proposed in ATC 3-06. Since then, the R factor has been present, in various forms, in all seismic regulations (in the European standard EC8 it is called the behaviour factor q).

The R factor allows the favourable effects of the nonlinear behaviour of the structure to be considered approximately in a standard linear analysis, and therefore provides a very simple and practical tool for seismic design. However, it is necessary to bear in mind that describing the complex phenomenon of inelastic behaviour of a particular structure by means of a single average number can be confusing and misleading. For this reason, the R factor approach, although it is very convenient for practical applications and has served the professional community well over decades, can provide only very rough answers to the problems encountered in seismic analysis and design. It should also be noted that "the values of R must be chosen and used with judgement", as stated in Sect. 3.1 of the Commentary to the ATC 3-06 document. According to ATC-19 (ATC 1995), "The R factors for the various framing systems included in the ATC-3-06 report were selected through committee consensus on the basis of (a) the general observed performance of like buildings during past earthquakes, (b) estimates of general system toughness, and (c) estimates of the amount of damping present during inelastic response. Thus, there is little technical basis for the values of R proposed in ATC-3-06." Nevertheless, the order of magnitude of R factors (1.5 to 8, related to design at the strength level) has been widely used in many codes and has remained more or less unchanged to the present day.
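In a linear analysis, the R factor simply divides the elastic (unreduced) spectral acceleration to give the design seismic coefficient. The minimal sketch below assumes a hypothetical elastic spectral acceleration of 1.0 g at the fundamental period and spans the range of R values quoted above; the function name is illustrative.

```python
def design_seismic_coefficient(sa_elastic_g: float, r_factor: float) -> float:
    """Design base-shear coefficient V/W obtained by reducing the elastic
    spectral acceleration (in units of g) by a force reduction factor R."""
    if r_factor < 1.0:
        raise ValueError("R must be at least 1.0")
    return sa_elastic_g / r_factor

# Hypothetical elastic demand of 1.0 g at the fundamental period
for r in (1.5, 3.0, 5.0, 8.0):
    c = design_seismic_coefficient(1.0, r)
    print(f"R = {r:3.1f}  ->  V/W = {c:.3f}")
```

The same divisor is applied to the whole structure, regardless of where inelastic deformations actually concentrate, which is the simplification the text cautions against.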

The model code recognized the existence of several "standard procedures for the analysis of forces and deformations in buildings subjected to earthquake ground motion", including Inelastic Response History Analysis. However, only two analysis procedures were specified in the document: the Equivalent Lateral Force Procedure and the Modal Analysis Procedure, with one degree of freedom per floor in the direction being considered. In relation to modal analysis, it was stated in Sect. 3.5 of the Commentary: "In various forms, modal analysis has been widely used in the earthquake-resistant design of special structures such as very tall buildings, offshore drilling platforms, dams, and nuclear power plants, but this is the first time that modal analysis has been included in the design provisions for buildings". The last part of this statement was true for the United States, but not worldwide, since modal analysis had already been adopted in the USSR's seismic code in 1957, as explained in the next section. The Commentary recognized that the simple model used for modal analysis was "likely to be inadequate if the lateral motions in two orthogonal directions and the torsional motion are strongly coupled". In such cases, and in others where the simple model was not adequate, the possibility of a "suitable generalization of the concepts" was mentioned.
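The modal analysis model with one degree of freedom per floor is, in its simplest form, a shear building: floor masses lumped on a diagonal mass matrix and storey stiffnesses assembled into a tridiagonal stiffness matrix. The sketch below solves the generalized eigenproblem K φ = ω² M φ with NumPy by transforming it to a symmetric standard problem. It assumes a planar shear-building idealization (no coupling between directions or with torsion, consistent with the limitation quoted above); the function name and the numerical values are hypothetical.

```python
import numpy as np

def shear_building_modes(masses, stiffnesses):
    """Periods and mode shapes of a planar shear building with one lateral
    DOF per floor. masses[i] and stiffnesses[i] belong to floor/storey i,
    counted from the ground up; storey i connects floor i to the floor below."""
    m = np.asarray(masses, dtype=float)
    k = np.asarray(stiffnesses, dtype=float)
    n = m.size
    K = np.zeros((n, n))
    for i in range(n):
        K[i, i] += k[i]
        if i + 1 < n:
            K[i, i] += k[i + 1]
            K[i, i + 1] = K[i + 1, i] = -k[i + 1]
    # Diagonal mass matrix: K phi = w^2 M phi becomes the symmetric
    # standard problem A v = w^2 v with A = M^-1/2 K M^-1/2.
    m_inv_sqrt = 1.0 / np.sqrt(m)
    A = K * np.outer(m_inv_sqrt, m_inv_sqrt)
    eigvals, eigvecs = np.linalg.eigh(A)        # ascending eigenvalues
    periods = 2.0 * np.pi / np.sqrt(eigvals)    # longest period first
    phi = eigvecs * m_inv_sqrt[:, None]         # back to physical coordinates
    phi = phi / phi[-1, :]                      # roof component of each mode = 1
    return periods, phi

# Hypothetical 3-storey frame: 200 t per floor, 2e5 kN/m per storey (t, kN, s)
periods, phi = shear_building_modes([200.0, 200.0, 200.0], [2.0e5, 2.0e5, 2.0e5])
print("Periods (s):", np.round(periods, 3))
```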

Despite the tremendous progress which has been made in the field of earthquake engineering in recent decades, it can be concluded that the existing regulations, which of course contain many new features and improvements, are essentially still based on the basic principles that were defined in the ATC 3-06 document, with the partial exception of the United States, where the updating of seismic provisions has been the fastest.

The cover-page of ATC 3-06

Other countries

At the beginning of the 1930s, seismic codes had been instituted only in Italy and Japan, and in a few towns in California. As mentioned in the previous section, the first mandatory seismic codes in the United States were adopted in 1933. By the end of that decade, seismic codes had been enacted in three more countries: New Zealand in 1935 (draft code in 1931), India in 1935, and Chile in 1939 (provisional regulations in 1930). In all cases a damaging earthquake triggered the adoption of seismic regulations (Reitherman 2012, p. 200 and p. 216). Seismic codes followed later in Canada in 1941 (Appendix to the National Building Code), Romania in 1942, Mexico in 1942, and Turkey in 1944 (a post-event response code had already been adopted in 1940). The USSR's first seismic code was adopted in 1941 (Reitherman 2012, p. 288). The Standards and Regulations for Building in Seismic Regions, adopted in the USSR in 1957, was apparently the first code to include the modal response spectrum method as the main analysis procedure (Korčinski 1964). This analysis procedure was later included in several European seismic codes and has remained the most popular procedure for seismic analysis in Europe up to the present day. In EC8, too, it is the basic analysis method. In 1963 and 1964, respectively, Slovenia and the whole of the former Yugoslavia adopted seismic codes which, with respect to the analysis method, were similar to the Soviet code. In China, the first code was drafted in 1955, but was not adopted (Reitherman 2012, p. 288). The first official seismic design code (The Code for the Seismic Design of Civil and Industrial Buildings) was issued in 1974.

Level of the design lateral seismic load

Interestingly, the level of the design horizontal seismic load (about 10% of the weight of the building), which was proposed in 1909 in Italy and also used in Japan in the first half of the twentieth century, was maintained in the seismic regulations for the majority of buildings up to the latest generation of regulations, in which the design horizontal seismic load increased on average. An exception was Japan, where the seismic design loads had already increased to 20% of the weight in 1950. The value of about 10% proposed in Italy was based on studies of three buildings which survived the 1908 Messina Earthquake. However, this study was apparently not known in other parts of the world. The Japanese engineer Naito wrote: "In Japan, as in other seismic countries, it is required by the building code [from 1924 onward] to take into account a horizontal force of at least 0.1 of the gravity weight, acting on every part of the building. But this seismic coefficient of 0.1 of gravity has no scientific basis either from past experience or from possible occurrence in the future. There is no sound basis for this factor, except that the acceleration of the Kwanto earthquake for the first strong portion as established from the seismographic records obtained at the Tokyo Imperial University was of this order." (Reitherman 2012, p. 172). Freeman (1930) expressed a similar opinion: "There is a current notion fostered by seismologists, and incorporated in the tentative building laws of several California cities, that the engineer should work to a seismic coefficient of 1/10 g. … Traced back to the source this rule is found to be a matter of opinion, not of measurement; a product, not of the seismometer, but of the "guessometer"."

Explanations as to why 10% of the weight of a building is an adequate design horizontal seismic load have changed over time. The seismic coefficient, which represents the ratio between the seismic load and the weight (plus some portion of the live load), depends on the ground acceleration and the dynamic characteristics of the structure. Initially, structures were assumed to be rigid, having the same acceleration as the ground. At the beginning of the twentieth century, instruments for recording strong ground motion were not available. Some estimates of the level of maximum ground accelerations were obtained from observations of the sliding and overturning of rigid bodies such as stone cemetery monuments. Freeman (1932, p. 76) compiled a table of the relations between the Rossi–Forel intensity and peak ground acceleration, as proposed by six different authors. The values of accelerations in m/s² for intensities VIII, IX and X were within the ranges 0.5–1.0 (mean 0.58), 1.0–2.0 (mean 1.23) and 2.5–3.0 (mean 2.62), respectively. Note that intensity IX on the Rossi–Forel scale ("Partial or total destruction of some buildings") approximately corresponds to intensity IX on the European Macroseismic Scale (EMS). The data clearly indicate that the values of the peak ground acceleration were grossly underestimated.
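Under the rigid-structure assumption, the seismic coefficient is simply the peak ground acceleration expressed as a fraction of g. The short sketch below converts Freeman's mean values quoted above; it assumes g = 9.81 m/s², and the function name is illustrative. The means correspond to roughly 0.06 g, 0.13 g and 0.27 g, so a coefficient of 0.1 lies between the mean estimates for intensities VIII and IX.

```python
G = 9.81  # acceleration due to gravity, m/s^2 (rounded)

def rigid_seismic_coefficient(pga: float) -> float:
    """Seismic coefficient of a rigid structure: peak ground acceleration
    (m/s^2) expressed as a fraction of g."""
    return pga / G

# Mean values from Freeman's (1932) table, as quoted in the text (m/s^2)
freeman_means = {"VIII": 0.58, "IX": 1.23, "X": 2.62}
for intensity, pga in freeman_means.items():
    coeff = rigid_seismic_coefficient(pga)
    print(f"Rossi-Forel {intensity:>4}: {pga:.2f} m/s^2 -> {coeff:.3f} g")
```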

The first strong motion accelerograms were recorded only in 1933, during the Long Beach earthquake. Maximum acceleration values did not deviate significantly from 0.1 g until 1940, when the famous El Centro 1940 accelerogram, with a maximum acceleration of more than 0.3 g, was recorded. At the time of the 1971 San Fernando Earthquake, the peak ground acceleration produced by that earthquake was three times what most engineers of the time thought possible (Reitherman 2012, p. 272). Engineers became aware that ground acceleration can be much higher than expected, and that very considerable dynamic amplification can occur if the period of the structure is in the range of the predominant periods of the ground motion, both resulting in accelerations of the structure much greater than 0.1 g. With this awareness, a seismic coefficient of about 10% could be justified only by the favourable influence of several factors, mainly energy dissipation in the inelastic range and so-called overstrength, i.e. the strength capacity above that required by the code design loads. In the 1974 edition of the SEAOC code, the basis for the design load levels was made explicit in the Commentary for the first time: "The minimum design forces prescribed by the SEAOC Recommendations are not to be implied as the actual forces to be expected during an earthquake. The actual motions generated by an earthquake may be expected to be significantly greater than the motions used to generate the prescribed minimum design forces. The justification for permitting lower values for design are many-fold and include: increased strength beyond working stress levels, damping contributed by all the building elements, an increase in ductility by the ability of members to yield beyond elastic limits, and other redundant contributions. (SEAOC Seismology Committee 1974, p. 7-C)" (Reitherman 2012, p. 277). More recently, other influences have been studied, e.g. the shape of the uniform hazard spectrum used in seismic design.
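The dynamic amplification mentioned above can be illustrated with a smoothed elastic response spectrum. The sketch below assumes the shape of the horizontal elastic spectrum of EC8 Part 1 with 5% damping (η = 1) and, as defaults, the corner periods of the Type 1 spectrum for ground type B (S = 1.2, T_B = 0.15 s, T_C = 0.5 s, T_D = 2.0 s); the ground acceleration of 0.3 g is hypothetical, chosen to be comparable to the El Centro record. On the plateau, S_e = 0.3 × 1.2 × 2.5 = 0.9 g, nine times the traditional coefficient of 0.1.

```python
def ec8_elastic_spectrum(T, ag, S=1.2, TB=0.15, TC=0.5, TD=2.0, eta=1.0):
    """Elastic spectral acceleration Se(T) with the shape of the EC8
    horizontal elastic spectrum (intended for 0 <= T <= 4 s).
    Defaults: Type 1 spectrum, ground type B, 5% damping (eta = 1).
    ag and the returned value share the same units (here: g)."""
    if T < 0.0:
        raise ValueError("period must be non-negative")
    if T <= TB:
        return ag * S * (1.0 + T / TB * (eta * 2.5 - 1.0))
    if T <= TC:
        return ag * S * eta * 2.5
    if T <= TD:
        return ag * S * eta * 2.5 * TC / T
    return ag * S * eta * 2.5 * TC * TD / T ** 2

ag = 0.3  # hypothetical design ground acceleration, in g
for T in (0.0, 0.3, 1.0, 2.0):
    se = ec8_elastic_spectrum(T, ag)
    print(f"T = {T:.1f} s: Se = {se:.3f} g, Se / 0.1 g = {se / 0.1:.1f}")
```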

In Japan, Sano and Naito had already advocated making structures as stiff as possible. Designing stiff and strong structures has remained popular in Japan until the present time. Otani (2004) wrote: "The importance of limiting story drift during an earthquake by providing large stiffness and high lateral resistance should be emphasized in earthquake engineering." Consequently, since 1950 the design seismic lateral loads in Japan have generally been larger than in the rest of the world. More recently, the level of the design seismic loads has gradually increased, on average, in other countries as well. The reasons for this trend are higher estimates of seismic hazard, obtained from new probabilistic seismic hazard analyses and new ground motion databases, and greater attention to damage limitation.

When comparing seismic coefficients, it should be noted that the allowable stress design used in early codes has been replaced by limit state design, so that the values of seismic coefficients may not be directly comparable.

Present: gradual implementation of nonlinear methods

Introduction

Most buildings experience significant inelastic deformations when affected by strong earthquakes. The gap between measured ground accelerations and the seismic design forces defined in codes was one of the factors that prompted quantitative thinking beyond the elastic response of structures. At the Second World Conference on Earthquake Engineering in 1960, several leading researchers presented significant early papers on inelastic response. However, there was a long way to go before explicit nonlinear analysis found its way into the more advanced seismic codes. Initially the most popular approach was the use of force reduction factors, and this approach is still popular today. Although this concept for taking into account the influence of inelastic behaviour in linear analysis has served the profession well for several decades, a truly realistic assessment of structural behaviour in the inelastic range can be made only by means of nonlinear analysis. It should be noted, however, that "good nonlinear analysis will not trigger good designs but it will, hopefully, prevent bad designs." (Krawinkler 2006). Moreover: "In concept, the simplest method that achieves the intended objective is the best one. The more complex the nonlinear analysis method, the more ambiguous the decision and interpretation process is. … Good and complex are not synonymous, and in many cases they are conflicting." (Krawinkler 2006).

Current developments in the analysis procedures of seismic codes are characterized by a gradual implementation of nonlinear analysis, which should be able to explicitly simulate the second basic feature of structural response to strong seismic ground motion, i.e. inelastic behaviour. For such nonlinear analyses, the data about the structure have to be known, so they are very well suited for the analysis of existing structures. In the case of newly designed structures, a preliminary design has to be made before starting a nonlinear analysis. Typical structural response measures that form the output of such an analysis (also called "engineering demand parameters") are the storey drifts, the deformations of the "deformation-controlled" components, and the force demands in "force-controlled" (i.e. brittle) components that, in contemporary buildings, are expected to remain elastic. Basically, a designer performing a nonlinear analysis should think in terms of deformations rather than forces. In principle, all displacement-based procedures require a nonlinear analysis.

There are two groups of methods for nonlinear seismic analysis: response-history analysis, and static (pushover-based) analysis, each with several options. They will be discussed in the next two sections. An excellent guide on nonlinear methods for practicing engineers was prepared by Deierlein et al. (2010).

Nonlinear response history analysis (NRHA)

Nonlinear response history analysis (NRHA) is the most advanced deterministic analysis method available today. It represents a rigorous approach, and is irreplaceable in research and in the design or assessment of important buildings. However, due to its complexity, it has in practice been used mostly for special structures. NRHA is not only computationally demanding (a problem whose importance has gradually diminished with the development of advanced hardware and software), but also requires additional data which are not needed in pushover-based nonlinear analysis: a suite of accelerograms, and data about the hysteretic behaviour of structural members. A consensus about the proper way to model viscous damping in the case of inelastic structural response has not yet been reached. A wide range of assumptions is needed in all steps of the process, from ground motion selection to nonlinear modelling, and many of these assumptions are based on judgement. Blind predictions of the results of a bridge column test (see Sect. 1) are a good example of the potential problems which can arise when NRHA is applied. Moreover, the complete analysis procedure is less transparent than that of simpler methods. For this reason, the great majority of codes which permit the use of NRHA require an independent review of the results of such analyses.
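The three extra ingredients named above (an accelerogram, a hysteretic model and a damping assumption) already appear in the smallest possible NRHA: a single-degree-of-freedom system. The sketch below is illustrative only. It assumes an elastic-perfectly-plastic hysteretic rule, viscous damping proportional to the initial stiffness (one of several debated options), and the explicit central difference integration scheme; the function name and the sinusoidal excitation are hypothetical and stand in for a recorded accelerogram.

```python
import math

def elastoplastic_sdof_history(ag, dt, mass, k, fy, zeta=0.05):
    """Displacement and restoring-force histories of an SDOF system with an
    elastic-perfectly-plastic spring, excited by ground acceleration ag
    (sequence in m/s^2 sampled at dt seconds), starting from rest.
    Integration: explicit central difference method."""
    omega = math.sqrt(k / mass)
    if dt >= 2.0 / omega:                       # stability requires dt < T / pi
        raise ValueError("time step too large for the central difference method")
    c = 2.0 * zeta * mass * omega               # damping from initial stiffness (assumption)
    k_hat = mass / dt ** 2 + c / (2.0 * dt)
    a_coef = mass / dt ** 2 - c / (2.0 * dt)
    b_coef = 2.0 * mass / dt ** 2
    u = 0.0
    u_prev = 0.5 * dt ** 2 * (-ag[0])           # u at t = -dt for a system at rest
    fs = 0.0
    disp, force = [u], [fs]
    for i in range(len(ag) - 1):
        p = -mass * ag[i]                       # effective earthquake force
        u_next = (p - fs + b_coef * u - a_coef * u_prev) / k_hat
        # Elastic trial force, then return to the yield limit |fs| <= fy
        fs = max(-fy, min(fy, fs + k * (u_next - u)))
        u_prev, u = u, u_next
        disp.append(u)
        force.append(fs)
    return disp, force

# Hypothetical excitation (not a recorded accelerogram): 2 s of a 2 Hz sine
dt = 0.005
ag = [3.0 * math.sin(2.0 * math.pi * 2.0 * i * dt) for i in range(400)]
k = (2.0 * math.pi / 0.5) ** 2                  # unit mass, natural period 0.5 s
u_lin, f_lin = elastoplastic_sdof_history(ag, dt, 1.0, k, fy=1.0e9)  # stays elastic
u_nl, f_nl = elastoplastic_sdof_history(ag, dt, 1.0, k, fy=0.5)      # yields
print(f"peak |u|: elastic {max(map(abs, u_lin)):.4f} m, "
      f"inelastic {max(map(abs, u_nl)):.4f} m")
```

Changing the damping model, the hysteretic rule or the input record changes the computed peaks, which is exactly the sensitivity to modelling choices that the text describes.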

The advantages and disadvantages of NRHA have been summarized in a recent paper by the authors representing both academia and practice in the United States (Haselton et al. 2017). The advantages include "the ability to model a wide variety of nonlinear material behaviors, geometric nonlinearities (including large displacement effects), gap opening and contact behavior, and non-classical damping, and to identify the likely spatial and temporal distributions of inelasticity. Nonlinear response history analysis also has several disadvantages, including increased effort to develop the analytical model, increased time to perform the analysis (which is often complicated by difficulties in obtaining converged solutions), sensitivity of computed response to system parameters, large amounts of analysis results to evaluate and the inapplicability of superposition to combine non-seismic and seismic load effects." Moreover, "seemingly minor differences in the way damping is included, hysteretic characteristics are modeled, or ground motions are scaled, can result in substantially different predictions of response. … The provisions governing nonlinear response history analysis are generally non-prescriptive in nature and require significant judgment on the part of the engineer performing the work."

To the author's knowledge, the first code in which the response-history analysis was implemented was the seismic code adopted in the former Yugoslavia in 1981 (JUS 1981). In this code, a "dynamic analysis method", meant as a linear and nonlinear response-history analysis, was included. In the code, it was stated: "By means of such an analysis the stresses and deformations occurring in the structure for the design and maximum expected earthquake can be determined, as well as the acceptable level of damage which may occur to the structural and non-structural elements of the building in the case of the maximum expected earthquake." The application of this method was mandatory for all out-of-category, i.e. very important buildings, and for prototypes of prefabricated buildings or structures which are produced industrially in large series. Such a requirement was very advanced (and maybe premature) at that time (Fischinger et al. 2015).

In the USA, the 1991 Uniform Building Code (UBC) was the first to include procedures for the use of NRHA in design work. In that code, response-history analysis was required for base-isolated buildings and buildings incorporating passive energy dissipation systems (Haselton et al. 2017). After that, several codes in different countries included NRHA, typically with few accompanying provisions, leaving the analyst with considerable freedom of choice. Reasonably comprehensive provisions/guidelines have been prepared only for the most recent version of the ASCE 7 standard, i.e. ASCE 7-16 (ASCE 2017). An overview of the status of nonlinear analysis in selected countries is provided in Sect. 3.5. In this paper, NRHA will not be discussed in detail.

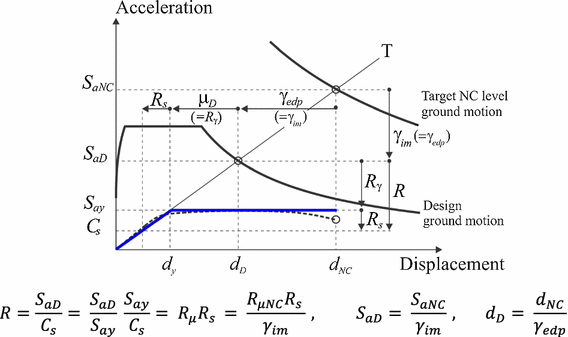

Nonlinear static (pushover) analysis

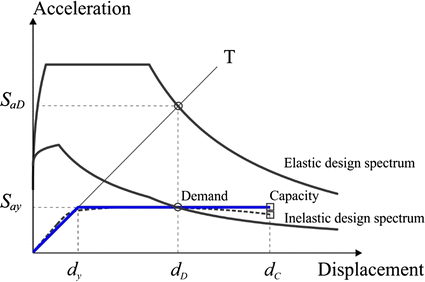

A pushover-based analysis was first introduced in the 1970s as a rapid evaluation procedure (Freeman et al. 1975). In the 1980s, it was given the name Capacity Spectrum Method (CSM). The method was also developed into a design verification procedure for the Tri-services (Army, Navy, and Air Force) "Seismic Design Guidelines for Essential Buildings" manual (Army 1986). An important milestone was the paper by Mahaney et al. (1993), in which the acceleration–displacement response spectrum (ADRS, later also called AD) format was introduced, enabling visualization of the assessment procedure. In 1996, CSM was adopted in the guidelines for Seismic Evaluation and Retrofit of Concrete Buildings, ATC 40 (ATC 1996). In all CSM formulations, the inelastic behaviour of the structural system is accounted for by applying effective viscous damping values to the linear-elastic response spectrum (i.e. an "overdamped spectrum"). In the N2 method, initially developed by Fajfar and Fischinger (1987, 1989) and later formulated in acceleration–displacement (AD) format (Fajfar 1999, 2000), inelastic spectra are used instead of overdamped elastic spectra. The N2 method was adopted in EC8 in 2004. In FEMA 273 (1997), the target displacement was determined by the "Coefficient Method". This approach, which has been retained in all subsequent FEMA documents and has also been adopted in the ASCE 41-13 standard (ASCE 2014), resembles the use of inelastic spectra. In the United States and elsewhere, the use of pushover-based procedures has accelerated since the publication of the ATC 40 and FEMA 273 documents. In this section, the discussion will be limited to the variant of the pushover-based method using inelastic spectra. A comprehensive summary of pushover analysis procedures is provided in Aydinoğlu and Önem (2010).

A simple pushover approach, which could be applied at the storey level and used for the analysis of the seismic resistance of low-rise masonry buildings, was developed in the late 1970s by Tomaževič (1978). This approach was also adopted in a regional code for the retrofitting of masonry buildings after the 1976 Friuli earthquake in the Italian region of Friuli-Venezia Giulia (Regione Autonoma Friuli-Venezia Giulia 1977).

Pushover-based methods combine nonlinear static (i.e. pushover) analysis with the response spectrum approach. Seismic demand can be determined for an equivalent single-degree-of-freedom (SDOF) system from an inelastic response spectrum (or an overdamped elastic response spectrum). This requires a transformation of the multi-degree-of-freedom (MDOF) system to an equivalent SDOF system. This transformation, which represents the main limitation on the applicability of pushover-based methods, would be exact only if the analysed structure vibrated in a single mode with a deformation shape that did not change over time. This condition is, however, fulfilled only in the case of a linear elastic structure with a negligible influence of higher modes. Nevertheless, the assumption of a single time-invariant mode is used in pushover-based methods for inelastic structures, as an approximation.
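As a sketch of the MDOF-to-SDOF transformation, the snippet below follows the form used in the N2 method (Fajfar 2000): with an assumed displacement shape φ normalized to 1 at the roof, the equivalent mass is m* = Σ mᵢφᵢ, the transformation factor is Γ = m* / Σ mᵢφᵢ², and base shear and roof displacement are both divided by Γ. The single, time-invariant shape φ is exactly the approximation discussed above. The helper names and the numbers in the usage lines are hypothetical.

```python
def equivalent_sdof(masses, phi):
    """Equivalent SDOF mass m* and transformation factor Gamma for an
    assumed displacement shape phi, normalized so that phi[-1] (roof) = 1:
    m* = sum(m_i * phi_i),  Gamma = m* / sum(m_i * phi_i**2)."""
    if abs(phi[-1] - 1.0) > 1e-9:
        raise ValueError("phi must be normalized to 1 at the roof")
    m_star = sum(m * p for m, p in zip(masses, phi))
    gamma = m_star / sum(m * p * p for m, p in zip(masses, phi))
    return m_star, gamma

def to_sdof(base_shear, roof_disp, gamma):
    """Transform one point of the MDOF pushover curve to the SDOF system."""
    return base_shear / gamma, roof_disp / gamma

# Hypothetical 3-storey building, equal floor masses (t), linear shape
m_star, gamma = equivalent_sdof([200.0, 200.0, 200.0], [1 / 3, 2 / 3, 1.0])
F_star, D_star = to_sdof(1800.0, 0.18, gamma)   # base shear in kN, roof displacement in m
print(f"m* = {m_star:.1f} t, Gamma = {gamma:.3f}, F* = {F_star:.0f} kN, D* = {D_star:.3f} m")
```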

Pushover-based analyses can be used as a rational, practice-oriented tool for seismic analysis. Compared to traditional elastic analyses, this kind of analysis provides a wealth of additional important information about the expected structural response, as well as helpful insight into the structural aspects which control performance during severe earthquakes. Pushover-based analyses provide data on the strength and ductility of structures which cannot be obtained by elastic analysis. Furthermore, they are able to expose design weaknesses that could remain hidden in an elastic analysis, so in most cases they are able to detect the most critical parts of a structure. However, special attention should be paid to potential brittle failures, which are usually not simulated in the structural models. The results of pushover analysis must therefore be checked to determine whether a brittle failure controls the capacity of the structure.

For practical applications and educational purposes, graphical displays of the procedure are extremely important, even when all the results can be obtained numerically. Pushover-based methods experienced a breakthrough when the acceleration–displacement (AD) format was implemented. Presented graphically in AD format, pushover-based analyses can help designers and researchers to better understand the basic relations between seismic demand and capacity, and between the main structural parameters determining seismic performance, i.e. stiffness, strength, deformation and ductility. They are a very useful educational tool for familiarizing students and practising engineers with general nonlinear seismic behaviour and with the concepts of seismic demand and capacity.

Pushover-based methods are usually applied for the performance evaluation of a known structure, i.e. an existing structure or a newly designed one. However, other types of analysis can, in principle, also be applied and visualised in the AD format. Basically, four quantities define seismic performance: strength, displacement, ductility and stiffness. Design and/or performance evaluation begins by fixing one or two of them; the others are then determined by calculation. Different approaches differ in the quantities that are chosen at the beginning of the design or evaluation. Assume that the approximate mass is known. In the case of seismic performance evaluation, the stiffness (period) and strength of the structure have to be known, and the displacement and ductility demands are calculated. In direct displacement-based design, the starting points are typically the target displacement and/or ductility demands, and the quantities to be determined are stiffness and strength. Conventional force-based design typically starts from the stiffness (which defines the period) and the approximate global ductility capacity. The seismic forces (defining the strength) are then determined, and finally the displacement demand is calculated. Capacity in terms of spectral acceleration can be determined from the capacity in terms of displacements. All these approaches can easily be visualised with the help of Fig. 3.
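The performance-evaluation case (period and strength known, displacement and ductility demands calculated) can be sketched numerically for the equivalent SDOF system. The snippet assumes the simple inelastic spectrum used in the N2 method (Fajfar 2000): elastic accelerations convert to displacements in AD format as S_d = S_a T²/(4π²); with R_μ = S_ae/S_ay, the ductility demand is μ = R_μ for T ≥ T_C (equal displacement rule) and μ = (R_μ − 1) T_C/T + 1 for T < T_C; the displacement demand is μ times the yield displacement. The function name and input values are hypothetical.

```python
import math

G = 9.81  # m/s^2

def n2_demand(T, say_g, sae_g, TC):
    """Displacement demand (m) and ductility demand of an equivalent SDOF
    system with period T (s) and yield acceleration say_g (g), for elastic
    spectral acceleration sae_g (g) at T and corner period TC (s)."""
    g_to_disp = (T / (2.0 * math.pi)) ** 2 * G   # AD format: Sd = Sa * T^2 / (4 pi^2)
    sde = sae_g * g_to_disp                      # elastic displacement demand
    dy = say_g * g_to_disp                       # yield displacement
    r_mu = sae_g / say_g                         # reduction factor due to ductility
    if r_mu <= 1.0:                              # the system remains elastic
        return sde, sde / dy
    if T >= TC:
        mu = r_mu                                # equal displacement rule
    else:
        mu = (r_mu - 1.0) * TC / T + 1.0         # short periods: larger demand
    return mu * dy, mu

# Hypothetical SDOF systems, both with TC = 0.5 s and Sae / Say = 3
for T, say, sae in ((1.0, 0.15, 0.45), (0.3, 0.30, 0.90)):
    sd, mu = n2_demand(T, say, sae, TC=0.5)
    print(f"T = {T:.1f} s: Sd = {sd * 1000:.1f} mm, ductility demand = {mu:.2f}")
```

For the longer period the displacement demand equals the elastic one; for the short period the same strength ratio leads to a larger ductility demand.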

Comparison of demand and capacity in the acceleration–displacement (AD) format

Note that, in all cases, the strength is the actual strength and not the design base shear according to seismic codes, which is in all practical cases less than the actual strength. Note also that stiffness and strength are usually related quantities.

Compared to NRHA, pushover-based methods are a much simpler and more transparent tool, requiring simpler input data. The computation time is only a fraction of that required by NRHA, and the use of the results obtained is straightforward. Of course, these advantages of pushover-based methods have to be weighed against their lower accuracy compared to NRHA, and against their limitations. It should be noted that pushover analyses are approximate in nature and based on static loading. They have no strict theoretical basis. In spite of extensions like those discussed in the next section, they may not provide acceptable results for some building structures with important higher mode effects, including torsion. For example, they may detect only the first local mechanism that will form, while not exposing other weaknesses that will be generated when the structure's dynamic characteristics change after formation of the first local mechanism. Pushover-based analysis is an excellent tool for understanding inelastic structural behaviour. When it is used for quantification purposes, the appropriate limitations should be observed. Additional discussion on the advantages, disadvantages and limitations of pushover analysis is available in, for instance, Krawinkler and Seneviratna (1998), Fajfar (2000) and Krawinkler (2006).

The influence of higher modes in elevation and in plan (torsion)

The main assumption in basic pushover-based methods is that the structure vibrates predominantly in a single mode. This assumption is sometimes not fulfilled, especially in high-rise buildings, where higher mode effects may be important along the height of the building, and/or in plan-asymmetric buildings, where substantial torsional influences can occur. Several approaches have been proposed for taking the higher modes (including torsion) into account. The most popular is Modal pushover analysis, developed by Chopra and Goel (2002). Some of the proposed approaches require quite complex analyses. Baros and Anagnostopoulos (2008) wrote: "The nonlinear static pushover analyses were introduced as simple methods … Refining them to a degree that may not be justified by their underlying assumptions and making them more complicated to apply than even the nonlinear response-history analysis … is certainly not justified and defeats the purpose of using such procedures."

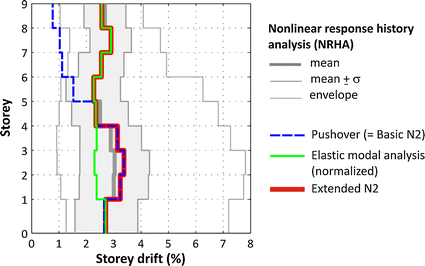

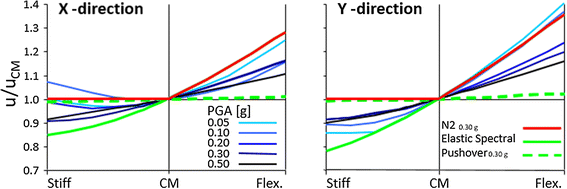

This section discusses the extended N2 method (Kreslin and Fajfar 2012), which combines two earlier approaches, one taking into account torsion (Fajfar et al. 2005) and the other higher mode effects in elevation (Kreslin and Fajfar 2011), into a single procedure that enables the analysis of plan-asymmetric medium- and high-rise buildings. The method is based on the assumption that the structure remains in the elastic range in higher modes. The seismic demand in terms of displacements and storey drifts can be obtained by combining the deformation quantities obtained by the basic pushover analysis with those obtained by the elastic modal response spectrum analysis. Both analyses are standard procedures which have been implemented in most commercial computer programs. Thus the approach is conceptually simple, straightforward, and transparent.

In the elastic range, the vibration in different modes can be decoupled, with the analysis performed separately for each mode and for each component of the seismic ground motion. The results obtained for different modes using design spectra are then combined by means of approximate combination rules, such as the "Square Root Sum of Squares" (SRSS) or "Complete Quadratic Combination" (CQC) rule. The SRSS rule can also be used to combine the results for different components of the ground motion. This approach has been widely accepted and used in practice, in spite of the approximations involved in the combination rules. In the inelastic range, superposition does not apply in theory. However, the coupling between vibrations in different modes is usually weak (Chopra 2017, p. 819). Thus, for the majority of structures, some kind of superposition can be applied as an approximation in the inelastic range, too.
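The two modal combination rules can be sketched as follows. SRSS takes the square root of the sum of squared peak modal responses; CQC adds cross terms weighted by a correlation coefficient ρᵢⱼ. The sketch assumes the widely used expression for ρᵢⱼ with equal modal damping ratios ζ and frequency ratio β = ωⱼ/ωᵢ; the modal values in the usage lines are hypothetical. For well-separated frequencies CQC approaches SRSS, while for closely spaced modes (common in plan-asymmetric buildings) the two can differ considerably.

```python
import math

def srss(values):
    """Square Root Sum of Squares combination of peak modal responses."""
    return math.sqrt(sum(v * v for v in values))

def cqc(values, omegas, zeta=0.05):
    """Complete Quadratic Combination of peak modal responses, using the
    correlation coefficient for equal modal damping ratios zeta."""
    total = 0.0
    for ri, wi in zip(values, omegas):
        for rj, wj in zip(values, omegas):
            beta = wj / wi
            rho = (8.0 * zeta ** 2 * (1.0 + beta) * beta ** 1.5
                   / ((1.0 - beta ** 2) ** 2
                      + 4.0 * zeta ** 2 * beta * (1.0 + beta) ** 2))
            total += rho * ri * rj
    return math.sqrt(total)

# Hypothetical peak modal responses (e.g. a drift) for two modes
print("well separated:", srss([3.0, 4.0]), cqc([3.0, 4.0], [10.0, 100.0]))
print("closely spaced:", srss([3.0, 4.0]), cqc([3.0, 4.0], [10.0, 10.5]))
```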

It has been observed that higher mode effects depend to a considerable extent on the magnitude of the plastic deformations. In general, higher mode effects in plan and in elevation decrease with increasing ground motion intensity. Thus, conservative estimates of the amplification due to higher mode effects can usually be obtained by elastic modal response spectrum analysis. The results of elastic analysis, properly normalised, usually represent an upper bound to the results obtained for different intensities of the ground motion in those parts of the structure where higher mode effects are important, i.e. in the upper part of medium- or high-rise buildings and at the flexible sides of plan-asymmetric buildings. The stiff sides of plan-asymmetric buildings are an exception. If the building is torsionally stiff, de-amplification usually occurs at the stiff side, and it mostly decreases with increasing plastic deformations. If the building is torsionally flexible, amplifications may also occur at the stiff side, and they may be larger in the case of inelastic behaviour than in the case of elastic response.

The extended N2 method was developed taking most of the above observations into account. It is assumed that the higher mode effects in the inelastic range are the same as in the elastic range, and that an estimate of the distribution of seismic demand throughout the structure, conservative in most cases, can be obtained by enveloping the seismic demand in terms of deformations obtained by two analyses: the basic pushover analysis, which neglects higher mode effects, and the elastic modal analysis, which includes higher mode effects and whose results are normalized to the same roof displacement as in the pushover analysis. De-amplification due to torsion is not taken into account. The target displacements are determined as in the basic N2 method, or by any other procedure. Higher mode effects in plan (torsion) and in elevation are taken into account simultaneously. The analysis has to be performed independently for the two horizontal directions of the seismic action. Only the results obtained by elastic analysis are combined for the two horizontal components of the seismic action. The combined internal forces, e.g. the bending moments and shear forces, should be consistent with the deformations. For more details, see Kreslin and Fajfar (2012) and Fardis et al. (2015). Note that a variant of Modal pushover analysis is also based on the assumption that the vibration of the building in higher modes is linearly elastic (Chopra et al. 2004).
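The enveloping idea can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the complete procedure of Kreslin and Fajfar (2012): in elevation, elastic modal drifts are scaled to the pushover roof displacement and the larger of the two values is taken storey by storey; in plan, elastic displacements normalized by the displacement of the mass centre serve as correction factors, with values below 1.0 replaced by 1.0 because de-amplification is ignored. The function names and numbers are hypothetical.

```python
def envelope_in_elevation(pushover_drifts, elastic_drifts, pushover_roof, elastic_roof):
    """Storey drifts taken as the envelope of the pushover results and the
    elastic modal results scaled to the pushover roof displacement."""
    scale = pushover_roof / elastic_roof
    return [max(dp, de * scale) for dp, de in zip(pushover_drifts, elastic_drifts)]

def torsional_correction(elastic_normalized):
    """Correction factors in plan from elastic displacements divided by the
    mass-centre displacement; de-amplification (values < 1) is ignored."""
    return [max(1.0, r) for r in elastic_normalized]

# Hypothetical 4-storey example: drifts in % of storey height, roof displacements in m
drifts = envelope_in_elevation([0.8, 1.0, 0.9, 0.5], [0.6, 0.7, 0.7, 0.6], 0.20, 0.15)
factors = torsional_correction([0.85, 1.00, 1.25])  # stiff edge, mass centre, flexible edge
print("Enveloped drifts:", [round(d, 3) for d in drifts])
print("Correction factors in plan:", factors)
```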

Two examples of the results obtained by the extended N2 method are presented in Figs. 4 and 5. Figure 4 shows the storey drifts along the elevation of the 9-storey steel "Los Angeles building", which was investigated within the scope of the SAC project in the United States. Shown are the results of NRHA, of the basic pushover analysis, of the elastic modal response spectrum analysis, and of the extended N2 method. Figure 5 shows the normalized displacements, i.e. the displacements at different locations in plan divided by the displacement of the mass centre CM, obtained by NRHA for different intensities of ground motion, by the basic pushover analysis, by the elastic modal response spectrum analysis, and by the extended N2 method. The structural model corresponds to the SPEAR building, which was tested within the scope of a European project.

(adapted from Kreslin and Fajfar 2011)

Fig. 4 Comparison of storey drifts obtained by different procedures for the 9-storey LA building


(adapted from Fajfar et al. 2005)

Fig. 5 Comparison of normalized roof displacements in plan obtained by NRHA (mean values) for different intensities, elastic response spectrum analysis, pushover analysis, and the extended N2 method for the SPEAR building


The extended N2 method will most probably be implemented in the expected revised version of EC8. When the current Part 1 of EC8 was finalised, the extended version of the N2 method for plan-asymmetric buildings had not yet been fully developed. Nevertheless, based on preliminary results, the clause "Procedure for the estimation of torsional effects" was introduced, in which it was stated that "pushover analysis may significantly underestimate deformations at the stiff/strong side of a torsionally flexible structure". It was also stated that "for such structures, displacements at the stiff/strong side shall be increased, compared to those in the corresponding torsionally balanced structure" and that "this requirement is deemed to be satisfied if the amplification factor to be applied to the displacements of the stiff/strong side is based on the results of an elastic modal analysis of the spatial model".
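The EC8 clause does not prescribe how the amplification factor is computed. A minimal sketch in the spirit of the extended N2 method described above: roof displacements at several locations in plan are normalized by the displacement of the mass centre CM, the factor is the ratio of the normalized elastic value to the normalized pushover value, and factors below 1.0 are set to 1.0 because de-amplification due to torsion is not taken into account. The function name and the displacement values are hypothetical, and only the roof level is considered.

```python
import numpy as np


def torsional_correction_factors(elastic_disp, elastic_disp_cm,
                                 pushover_disp, pushover_disp_cm):
    """Torsional amplification factors at locations in plan (sketch).

    Each displacement profile is normalized by the displacement of the
    mass centre (CM); the factor is the ratio of the normalized elastic
    value to the normalized pushover value, never taken below 1.0.
    """
    elastic_norm = np.asarray(elastic_disp, dtype=float) / elastic_disp_cm
    pushover_norm = np.asarray(pushover_disp, dtype=float) / pushover_disp_cm
    factors = elastic_norm / pushover_norm
    # No de-amplification: factors below 1.0 are replaced by 1.0
    return np.maximum(factors, 1.0)


# Hypothetical roof displacements (m) at the stiff edge, CM and flexible edge
pushover = np.array([0.090, 0.100, 0.115])
elastic = np.array([0.088, 0.080, 0.096])
factors = torsional_correction_factors(elastic, 0.080, pushover, 0.100)
# Amplified pushover displacements
corrected = factors * pushover
```

With these numbers the stiff edge is amplified by about 1.22 and the flexible edge by about 1.04, so the corrected pushover displacements at the two edges become 0.11 m and 0.12 m.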

ASCE 41-13 basically uses the same idea of enveloping the results of the two analysis procedures in order to take into account the higher modes in elevation. In C7.3.2.1 it is stated "Where the NSP [Nonlinear Static Procedure] is used on a structure that has significant higher mode response, the LDP [Linear Dynamic Procedure, typically the modal response spectrum analysis] is also used to verify the adequacy of the evaluation or retrofit." The same recommendation is included in the very recent New Zealand guidelines (NZSEE 2017).

Nonlinear analysis in current codes

This section presents an overview of the status of nonlinear analysis in the codes of different countries and of its use in practice. The material is based on the responses of the author's colleagues to a quick survey and on the available literature. Nonlinear analysis (NRHA more often than pushover analysis) has been adopted in the majority of these countries as an optional procedure, which, however, is mandatory in some codes for tall buildings and for special structural systems, e.g. base-isolated buildings. In the following subsections, the countries and regions are listed in alphabetical order.

Canada

The latest approved version of the National Building Code of Canada is NBCC 2015. The code applies only to new buildings; there is no code for existing buildings. The Code is accompanied by a Commentary, which explains the intent of the code and how best to meet it. The provisions or specifications given in the Commentary are not mandatory but act as guidelines. According to the Code, dynamic analysis is the preferred method of analysis. The dynamic analysis procedure may be response spectrum analysis, linear time history analysis, or NRHA; in the last case a special study is required. The Commentary gives the specifics of the nonlinear analysis procedure and the conditions for a special study. Among other things, an independent design review is required when NRHA is used. Consultants (particularly in British Columbia) often use response spectrum analysis for the design of high-rise irregular buildings and of important buildings such as hospitals. Linear time history analysis is used only infrequently, whereas NRHA is not used in practice. Nonlinear analysis is used mainly for the evaluation of existing buildings. It is considered a very complicated task, hardly ever justified for the design of a new building. Pushover analysis is not directly referred to in the NBCC.

Chile

The present building design code (NCh 433) neither considers nor allows nonlinear analysis (static or dynamic). Nevertheless, all buildings taller than four storeys are required to undergo peer review. This review is generally done by means of parallel modelling and design. The seismic designs of some of the tallest buildings have been reviewed using pushover analysis. The code for base-isolated buildings (NCh2745) requires NRHA for all irregular or tall isolated structures. Nonlinear properties are considered, at least for the isolators. The code for industrial structures (NCh2369) has always allowed nonlinear analysis (static and dynamic), but it has been used only on rare occasions, for structures with energy dissipation devices and for complex structures. The new building design code, which has been drafted and is currently undergoing evaluation, does, however, take into account nonlinear analysis and performance-based design. Nonlinear structural analysis is taught at the undergraduate level at universities, so young designers are able to use standard software that includes nonlinear static and dynamic analysis. In advanced design offices, nonlinear analysis is regularly used.

China

According to the 2010 version of the Chinese Code for Seismic Design of Buildings (GB 50011-2010), linear analysis (static or dynamic) is the main procedure. "For irregular building structures with obvious weak positions that may result in serious seismic damage, the elasto-plastic deformation analysis under the action of rare earthquake shall be carried out … In this analysis, the elasto-plastic static analysing method or elasto-plastic time history analysing method may be adopted depending on the structural characteristics". In some cases, simplified nonlinear analysis can also be used. The code has a separate section on performance-based design, where it is stated that either linear analysis with increased damping or nonlinear static or dynamic analysis can be used for performance states in which nonlinear behaviour is expected. For tall buildings, linear analysis is performed for the frequent earthquake level, whereas nonlinear analysis, including pushover analysis and NRHA, is used for the major earthquake level. The type of nonlinear analysis required depends on the height and complexity of the structure. NRHA should be performed for buildings more than 200 m high or with severe irregularities. Buildings higher than 300 m have to be analyzed using two or more different computer programs in order to validate the results (Jiang et al. 2012).

Europe

Most countries in Europe use the European standard EN 1998 Design of structures for earthquake resistance (called Eurocode 8 or EC8), which consists of six parts. Part 1, enforced in 2004, applies to new buildings, and Part 3, enforced in 2005, applies to existing buildings. The main analysis procedure is linear modal response spectrum analysis. The equivalent static lateral force procedure can be used subject to some restrictions. Nonlinear analysis, either static (pushover) or NRHA, is permitted. The basic requirements for pushover analysis (the N2 method) are provided in the main text of Part 1, whereas more details are given in an informative annex. NRHA is regulated very inadequately, by only three clauses. In the revised version, which is currently under development, pushover analysis will play a more prominent role, whereas NRHA is still not adequately regulated in the draft of the revision. In practice, NRHA is used extremely rarely, whereas the use of pushover analysis varies from country to country.
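In the N2 method of the informative annex to EC8 Part 1, the target displacement is obtained from an equivalent single-degree-of-freedom (SDOF) system with a bilinear force-displacement relationship. The sketch below follows that procedure in simplified form; it assumes that the idealization (yield force, mass and period of the SDOF system, transformation factor) has already been derived from the pushover curve, that the elastic spectral acceleration is known, and the function name is hypothetical. Additional limits and checks in the code text are not reproduced.

```python
import math


def n2_target_displacement(se_tstar, t_star, t_c, fy_star, m_star, gamma):
    """Roof target displacement by the basic N2 method (sketch).

    se_tstar: elastic spectral acceleration Se(T*) (m/s^2)
    t_star: period of the idealized equivalent SDOF system (s)
    t_c: corner period between the constant-acceleration and
         constant-velocity ranges of the spectrum (s)
    fy_star: yield force of the equivalent SDOF system (N)
    m_star: mass of the equivalent SDOF system (kg)
    gamma: transformation factor between the MDOF and SDOF systems
    """
    # Elastic displacement demand of the equivalent SDOF system
    d_et = se_tstar * (t_star / (2.0 * math.pi)) ** 2
    if t_star >= t_c or fy_star / m_star >= se_tstar:
        # Medium/long periods (equal displacement rule) or elastic response
        d_t = d_et
    else:
        # Short-period range with inelastic response
        q_u = se_tstar * m_star / fy_star
        d_t = max(d_et / q_u * (1.0 + (q_u - 1.0) * t_c / t_star), d_et)
    # Transform back to the roof displacement of the MDOF model
    return gamma * d_t


# Hypothetical short-period structure (SI units)
d_roof = n2_target_displacement(se_tstar=10.0, t_star=0.3, t_c=0.5,
                                fy_star=2.5e6, m_star=1.0e6, gamma=1.3)
```

In the short-period branch the inelastic demand exceeds the elastic one, which is why the result is bounded below by the elastic displacement.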

India

Nonlinear analysis has not been included in the building code, and is not used in design. No changes are expected in the near future.

Iran

The Iranian Seismic Code was updated to its 4th Edition in 2014. The analysis procedures include linear procedures, i.e. linear static, response spectrum, and linear response history analyses, and nonlinear procedures, i.e. pushover analysis and NRHA. The linear static procedure is permitted for all buildings up to 50 m in height, with the exception of some irregular cases, whereas response spectrum and linear response history analyses are permitted in all cases. Pushover analysis and NRHA can be used for all kinds of buildings. However, one of the linear procedures should also be performed in addition to the nonlinear analysis. In the 50% draft version of the code, the use of nonlinear analysis was encouraged by a 20% reduction in force and drift limitations. In the final version, such a reduction is not permitted. The pushover method is based on the EC8 and NEHRP 2009 approaches. A standard for the seismic rehabilitation of existing buildings (Standard No. 360) exists. Its first edition was based mainly on FEMA 356, and its second edition mainly on ASCE 41-06. This standard has been widely used in retrofitting projects, and sometimes also in design projects. Pushover analysis is the most frequently used analysis method in seismic vulnerability studies of existing public and governmental buildings. It is used for all building types, including masonry buildings. The most popular variant is the coefficient method.
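The coefficient method mentioned above obtains the target (roof) displacement by modifying the elastic spectral displacement of an equivalent SDOF system with empirical coefficients. The sketch below assumes the ASCE 41-13 form, δt = C0 C1 C2 Sa Te²/(4π²) g; the coefficients are treated as given inputs (their evaluation is not reproduced), earlier documents such as FEMA 356 used an additional coefficient that is omitted here, and the function name is hypothetical.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def coefficient_method_target_displacement(c0, c1, c2, sa_g, t_e):
    """Target displacement by the displacement coefficient method (sketch).

    c0: factor relating the SDOF spectral displacement to the roof displacement
    c1: factor relating expected inelastic to elastic displacement
    c2: factor for cyclic degradation and pinching
    sa_g: spectral acceleration at the effective period, in units of g
    t_e: effective fundamental period (s)
    Returns the target displacement in metres.
    """
    # Elastic spectral displacement Sa * Te^2 / (4 pi^2), amplified by the coefficients
    return c0 * c1 * c2 * sa_g * G * t_e ** 2 / (4.0 * math.pi ** 2)


# Hypothetical input values
delta_t = coefficient_method_target_displacement(c0=1.3, c1=1.0, c2=1.0,
                                                 sa_g=0.6, t_e=1.0)
```

For these hypothetical inputs the target displacement is about 0.19 m.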

Japan

The seismic design requirements are specified in the Building Standard Law. In 1981 a two-phase seismic design procedure was introduced, which is still normally used in design offices. The performance-based approach was implemented in 2000. Most engineered steel and concrete buildings are designed with the aid of nonlinear analysis (pushover or NRHA) in the second-phase design. For buildings less than 60 m in height, pushover analysis is usually conducted in order to check the ultimate resistance of the members and of the building. For high-rise buildings (more than 60 m in height), NRHA is required. Usually, a pushover analysis of a realistic 3D model is first conducted in order to define the relationship between storey shear and storey drift for each storey. These relationships are idealized by trilinear models. NRHA is then performed on a simplified stick model (a shear or shear-flexure model). Only very rarely is a complex 3D model used directly for NRHA. Designs are subject to technical peer review. The software has to be evaluated by the Ministry of Land, Infrastructure, Transport and Tourism.
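The storey-wise trilinear idealization described above can be illustrated as a monotonic storey shear-drift backbone. In practice the break points and stiffnesses would be derived from the pushover analysis of the 3D model; the parameterization below (initial stiffness, two break points, stiffness ratios) and the function name are hypothetical, and the hysteretic rules needed for NRHA of the stick model are omitted.

```python
import numpy as np


def trilinear_storey_shear(drift, k0, v_crack, v_yield, k_ratio_2, k_ratio_3):
    """Storey shear on a monotonic trilinear backbone (sketch).

    drift: storey drift(s), in the length unit used for the stiffnesses
    k0: initial (elastic) storey stiffness
    v_crack: shear at the first break point (e.g. cracking)
    v_yield: shear at the second break point (yield)
    k_ratio_2: stiffness after the first break point, as a fraction of k0
    k_ratio_3: stiffness after yield, as a fraction of k0
    """
    drift = np.asarray(drift, dtype=float)
    d = np.abs(drift)
    d_crack = v_crack / k0
    d_yield = d_crack + (v_yield - v_crack) / (k_ratio_2 * k0)
    v = np.where(
        d <= d_crack,
        k0 * d,
        np.where(
            d <= d_yield,
            v_crack + k_ratio_2 * k0 * (d - d_crack),
            v_yield + k_ratio_3 * k0 * (d - d_yield),
        ),
    )
    # Same backbone for both loading directions
    return np.sign(drift) * v


# Hypothetical storey properties (kN, mm): cracking at 5 mm, yield at 25 mm
shears = trilinear_storey_shear([2.0, 15.0, 45.0, -15.0], k0=200.0,
                                v_crack=1000.0, v_yield=2000.0,
                                k_ratio_2=0.25, k_ratio_3=0.01)
```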

Mexico

The present code (MOC-2008) permits NRHA. The design base shear determined by dynamic analysis should not be less than 80% of the base shear determined by the static force procedure. Over the last 10 years, NRHA has been used in Mexico City for very tall buildings (from 30 to 60 storeys), mainly because it was required by international insurance companies. Static pushover analysis has seldom been used in practice. The new code, which is expected to be published at the end of 2017, will require dynamic spectral analysis for the design of the majority of structures, and the results will have to be verified by NRHA in the case of very tall or large buildings that do not comply with the regularity requirements.
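One straightforward way of satisfying a lower bound of this kind is to scale the results of the dynamic analysis up when the dynamic base shear falls short. The sketch below assumes that all response quantities are scaled by the same factor; the function name is hypothetical, and the exact scaling rules of the code are not reproduced.

```python
def dynamic_base_shear_scale_factor(v_dynamic, v_static, min_ratio=0.8):
    """Scale factor ensuring the dynamic design base shear is not less than
    min_ratio times the static base shear (sketch)."""
    required = min_ratio * v_static
    # Scale up only when the dynamic base shear is below the minimum
    return max(1.0, required / v_dynamic)


# Hypothetical base shears (kN)
factor = dynamic_base_shear_scale_factor(v_dynamic=6000.0, v_static=10000.0)
```

Here the dynamic base shear is only 60% of the static one, so the dynamic results would be multiplied by 8000/6000, i.e. about 1.33; if the dynamic base shear already exceeded 80% of the static value, the factor would be 1.0.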

New Zealand

The NZ seismic standard (NZS1170.5) requires either a response spectrum analysis or a response history analysis (linear or nonlinear) in order to obtain local member actions in tall or torsionally sensitive buildings. Even for other buildings (which are permitted to be analysed by equivalent static linear analysis), designers can opt to use more advanced analysis methods (so it could perhaps be argued that pushover analysis is acceptable in such cases). In practice, the majority of structures are still designed using the linear static approach, but NRHA is becoming more and more common. The linear static method is also the most common method for assessing existing buildings, although pushover analysis has also been used. Very recently, technical guidelines for the engineering assessment of existing buildings were published (NZSEE 2017). They recommend the use of SLaMA (Simple Lateral Mechanism Analysis) as a starting point for any detailed seismic assessment. SLaMA is a form of nonlinear static (pushover) analysis: a hand-calculation, upper-bound approach defined as "an analysis involving the combination of simple strength to deformation representations of identified mechanisms to determine the strength to deformation (push-over) relationship for the building as a whole". In addition to standard linear analyses and SLaMA, the standard nonlinear pushover procedure and NRHA are also included in the guidelines.
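The idea of combining simple strength-to-deformation representations of individual mechanisms can be illustrated with a deliberately simplified case. This sketch is not the full SLaMA procedure of the guidelines: it assumes that each mechanism (e.g. a wall or a frame) is elastic-perfectly plastic, that the mechanisms act in parallel at a common displacement, and that the building curve ends when the first mechanism reaches its ultimate displacement. The function name and the values are hypothetical.

```python
import numpy as np


def combined_capacity_curve(mechanisms, displacements):
    """Sum elastic-perfectly-plastic mechanism curves acting in parallel (sketch).

    mechanisms: list of (strength, yield_disp, ultimate_disp) tuples
    displacements: common global displacements at which to evaluate the curve
    Returns the total force at each displacement, set to NaN beyond the
    smallest ultimate displacement (first mechanism reaching its limit).
    """
    d = np.asarray(displacements, dtype=float)
    total = np.zeros_like(d)
    for strength, d_y, _ in mechanisms:
        # Linear up to yield, constant strength afterwards
        total += np.minimum(strength * d / d_y, strength)
    d_limit = min(m[2] for m in mechanisms)
    return np.where(d <= d_limit, total, np.nan)


# Hypothetical wall (400 kN, yield at 20 mm, ultimate 60 mm)
# and frame (300 kN, yield at 50 mm, ultimate 150 mm)
curve = combined_capacity_curve([(400.0, 20.0, 60.0), (300.0, 50.0, 150.0)],
                                [10.0, 40.0, 60.0, 80.0])
```

In this example the wall yields first and also limits the displacement capacity, so the frame never reaches its full strength before the curve ends at 60 mm.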

Taiwan

The seismic analysis procedures have remained practically unchanged since the 2005 version of the provisions. For new buildings, linear analysis using the response spectrum method is very common practice, regardless of the building's regularity or height. Pushover analysis is also very popular as a means to determine the peak roof displacement and the load factor for the design of the basement, the ground floor diaphragm, and the foundation or piling. Linear response history analysis is not common. NRHA is sometimes conducted for buildings with oil or metallic dampers. For tall buildings, with or without dampers, some engineers use general-purpose computer programs to evaluate the peak storey drift demands, the deformation demands on structural members, and the force demands on columns, the 1st floor diaphragm, and the foundation or basement. Base-isolated buildings have become more popular, and engineers prefer to use nonlinear springs for the base isolators only. Existing government buildings have to be retrofitted up to the current standard for new construction. Pushover analysis is very common for evaluating the existing condition of buildings and for verifying the retrofit design.

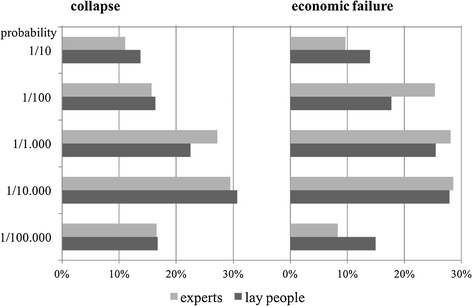

Turkey